Which Graphics Card Is Best for AI

"Is a graphics card important for AI?"

AI software keeps advancing, but the computer that runs it has to keep up as well. Because GPU technology is improving at a remarkable rate, GPUs have become a common choice for machine learning workloads. A strong GPU is especially helpful when you enhance or upscale old video with AVCLabs Video Enhancer AI, because it speeds up processing. So which graphics card is best for AI?

Part 1: Why Do We Need a Strong Graphics Card for AI

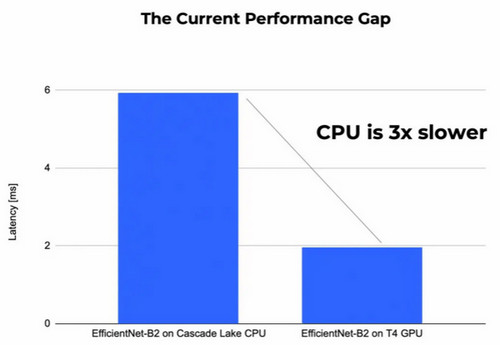

CPUs are everywhere and can be a more cost-effective option than GPUs for running AI-based solutions. However, finding models that are both accurate and efficient on CPUs can be a challenge. As a reference point for the rest of this post, the graph above compares the latency of an EfficientNet-B2 model on two different pieces of hardware: a T4 GPU and an Intel Cascade Lake CPU. In this example, a forward pass on the CPU is about 3X slower than a forward pass on the GPU. The exact gap depends on the model, the batch size, and the hardware being compared.
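
A comparison like this can be reproduced with a small timing harness. The sketch below is only an illustration: `measure_latency_ms`, `speedup`, and the two placeholder workloads are hypothetical names, and the placeholders would need to be replaced with a real forward pass of your model on each device.

```python
import statistics
import time


def measure_latency_ms(fn, warmup=3, runs=20):
    """Return the median wall-clock latency of fn() in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def speedup(baseline_ms, candidate_ms):
    """How many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms


# Placeholder workloads (assumptions): swap in a forward pass on the CPU
# and on the GPU to compare real hardware.
def slow_forward():
    return sum(i * i for i in range(20_000))


def fast_forward():
    return sum(i * i for i in range(2_000))


if __name__ == "__main__":
    slow_ms = measure_latency_ms(slow_forward)
    fast_ms = measure_latency_ms(fast_forward)
    print(f"speedup: {speedup(slow_ms, fast_ms):.1f}X")
```

When timing real GPU code, keep in mind that GPU work usually runs asynchronously, so the device has to be synchronized before the timer is stopped or the measured latency will be too low.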

Part 2: Why a Graphics Card Is Important for AI

The longest and most resource-intensive phase of most deep learning implementations is the training phase. This phase can be completed in a reasonable amount of time for models with a small number of parameters, but as the number of parameters increases, so does the training time. This has a dual cost: your resources are occupied for longer, and your team is left waiting, wasting valuable time.

Graphics processing units (GPUs) can reduce these costs, enabling you to run models with massive numbers of parameters quickly and efficiently. This is because GPUs let you parallelize your training tasks, distributing them over clusters of processors and performing compute operations simultaneously.
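
The parallelization pattern described here is often called data-parallel training: each processor computes a gradient on its own slice of the batch, and the results are averaged. The sketch below illustrates only the pattern. Threads stand in for GPUs, the one-parameter model `y = w * x` is a toy assumption, and all function names are hypothetical, so it will not make anything faster.

```python
from concurrent.futures import ThreadPoolExecutor


def shard_gradient(w, shard):
    """Mean gradient of the squared error (w*x - y)^2 with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def split(batch, num_workers):
    """Split the batch into equally sized shards.

    Assumes len(batch) is divisible by num_workers, so that averaging the
    shard gradients equals the full-batch gradient.
    """
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_gradient(w, batch, num_workers=4):
    """Compute one gradient per shard concurrently, then average them."""
    shards = split(batch, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        grads = list(pool.map(lambda shard: shard_gradient(w, shard), shards))
    return sum(grads) / len(grads)


# Toy data generated from y = 3x.
batch = [(float(x), 3.0 * x) for x in range(1, 9)]
full = shard_gradient(0.0, batch)
parallel = data_parallel_gradient(0.0, batch, num_workers=4)
print(full, parallel)  # the two gradients should match
```

On real hardware, a framework handles the sharding and the averaging step across GPUs, which is where fast interconnects between the cards start to matter.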

GPUs are also optimized for specific tasks, so they finish those computations faster than non-specialized hardware. They let you process the same workloads faster and free your CPUs for other work, which eliminates bottlenecks created by computing limitations.

Part 3: How to Choose a Suitable Graphics Card

We need to consider the following factors when choosing a suitable graphics card:

1. Ability to interconnect GPUs: When choosing a GPU, you need to consider which units can be interconnected. Interconnecting GPUs is directly tied to the scalability of your implementation and the ability to use multi-GPU and distributed training strategies. Typically, consumer GPUs do not support interconnection (NVLink for GPU interconnects within a server, and InfiniBand/RoCE for linking GPUs across servers), and NVIDIA has removed interconnections on GPUs below the RTX 2080.

2. Supporting software: NVIDIA GPUs are the best supported in terms of machine learning libraries and integration with common frameworks, such as PyTorch or TensorFlow. The NVIDIA CUDA toolkit includes GPU-accelerated libraries, a C and C++ compiler and runtime, and optimization and debugging tools. It lets you start immediately without worrying about building custom integrations.
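
To check what your own machine offers on the software side, a framework can report the GPUs it sees. The following is a minimal sketch that assumes PyTorch may or may not be installed. The helper name `list_cuda_gpus` is hypothetical, and the function returns an empty list when PyTorch or a CUDA-capable GPU is missing.

```python
def list_cuda_gpus():
    """Return name and memory for each CUDA GPU visible to PyTorch."""
    try:
        import torch  # optional dependency; assumed, not required
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    gpus = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        gpus.append({
            "index": index,
            "name": props.name,
            # total_memory is reported in bytes
            "memory_gb": round(props.total_memory / 1024**3, 1),
        })
    return gpus


if __name__ == "__main__":
    for gpu in list_cuda_gpus():
        print(gpu)
```

Seeing more than one entry in the result means multi-GPU strategies are at least possible on that machine. Whether the cards are linked by a fast interconnect is a separate question that this check does not answer.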

Part 4: Recommendations for Graphics Cards

The following are GPUs recommended for use in large-scale AI projects.

1. NVIDIA Tesla A100: The A100 is a GPU with Tensor Cores that incorporates multi-instance GPU (MIG) technology. It was designed for machine learning, data analytics, and HPC. The Tesla A100 is meant to scale to thousands of units and can be partitioned into up to seven GPU instances to fit workloads of different sizes. Each Tesla A100 provides up to 624 teraflops of performance, 40GB of memory, 1,555 GB/s of memory bandwidth, and 600GB/s interconnects.

2. NVIDIA Tesla V100: The NVIDIA Tesla V100 is a Tensor Core enabled GPU that was designed for machine learning, deep learning, and high performance computing (HPC). It is built on the NVIDIA Volta architecture, whose Tensor Cores are specialized for accelerating common tensor operations in deep learning. Each Tesla V100 provides roughly 112 to 130 teraflops of Tensor Core performance depending on the variant, up to 32GB of memory, and a 4,096-bit memory bus.

3. NVIDIA Tesla P100: The Tesla P100 is a GPU based on the NVIDIA Pascal architecture that is designed for machine learning and HPC. Each P100 provides up to 21 teraflops of half-precision performance, 16GB of memory, and a 4,096-bit memory bus.

4. NVIDIA Tesla K80: The Tesla K80 is a GPU based on the NVIDIA Kepler architecture that is designed to accelerate scientific computing and data analytics. It includes 4,992 NVIDIA CUDA cores and GPU Boost™ technology. Each K80 provides up to 8.73 teraflops of performance, 24GB of GDDR5 memory, and 480GB/s of memory bandwidth.

5. Google TPU: Google’s tensor processing units (TPUs) are slightly different. TPUs are chip-based or cloud-based application-specific integrated circuits (ASICs) for deep learning. These units are specifically designed for use with TensorFlow and are available only on Google Cloud Platform. Each TPU can provide up to 420 teraflops of performance and 128 GB of high bandwidth memory (HBM). Pod versions are also available that provide over 100 petaflops of performance, 32TB of HBM, and a 2D toroidal mesh network.
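
One simple way to narrow this list is to filter it by the memory your model needs. The sketch below uses only the memory sizes quoted above. The short keys and the `candidates` helper are hypothetical, and memory is just one factor alongside interconnects, software support, and budget.

```python
# Memory per accelerator in GB, taken from the list above.
ACCELERATOR_MEMORY_GB = {
    "A100": 40,
    "V100": 32,   # "up to" 32GB
    "P100": 16,
    "K80": 24,
    "Google TPU": 128,
}


def candidates(min_memory_gb):
    """Return accelerators with at least min_memory_gb, smallest first."""
    matches = [
        (memory, name)
        for name, memory in ACCELERATOR_MEMORY_GB.items()
        if memory >= min_memory_gb
    ]
    return [name for memory, name in sorted(matches)]


if __name__ == "__main__":
    # Example requirement (an assumption): the workload needs 32GB or more.
    print(candidates(32))
```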

Bonus: AI-based Video Quality Enhancer Software - AVCLabs Video Enhancer AI

Which graphics card is best for AI? There is no definitive answer, because it depends on a number of factors, including the type of AI workload you run and your budget. If you want to enhance or upscale video quality and resolution, NVIDIA's GeForce GTX 1080 Ti performed very well in our testing with AVCLabs Video Enhancer AI. You can also download AVCLabs Video Enhancer AI and try it yourself!

Key Features of AVCLabs Video Enhancer AI

- Upscale low-res videos to 1080p, 4K, and 8K

- Remove video noise and restore facial details

- Colorize B&W videos to bring them to life

- Blur all unwanted parts to protect privacy

- Stabilize footage by removing camera shake

Conclusion

In conclusion, we have explored why a strong graphics card matters for running AI workloads. We discussed how a graphics card processes data more efficiently and why that is important for AI. We then examined how to choose an appropriate graphics card for your intended use and recommended several options based on the current market. Finally, as a bonus, we introduced AVCLabs Video Enhancer AI, video quality enhancer software built around AI that makes it easy to improve the quality and resolution of your existing videos. With these points in mind, choosing the right graphics card for your AI system should be much simpler!